What is Artificial Intelligence (AI)?

Artificial intelligence (AI) is a branch of technology that allows computers and machines to mimic human abilities such as learning, reasoning, problem-solving, decision-making, creativity, and autonomous action.

AI-powered applications and devices can recognize and interpret objects, understand and respond to human language, learn from new data and experiences, generate informed recommendations, and act independently without direct human intervention. A well-known example of this autonomy is the self-driving car.

As of 2024, much of the focus in AI research, development, and media coverage centers on generative AI (GenAI), a powerful subset of AI that creates original text, images, videos, and other types of content. To fully grasp how generative AI works, it’s essential to understand its foundational technologies: machine learning (ML) and deep learning, which enable systems to learn patterns, improve over time, and generate increasingly sophisticated outputs.

Machine learning

Machine Learning (ML) is a core subset of artificial intelligence that focuses on building models by training algorithms to make predictions or decisions from data. Rather than being explicitly programmed for every task, ML systems learn from data patterns and improve over time.

Machine learning encompasses a wide variety of techniques and algorithms, including linear regression, logistic regression, decision trees, random forests, support vector machines (SVMs), k-nearest neighbors (KNN), clustering methods, and more. Each algorithm is designed to solve different types of problems depending on the nature and structure of the data.

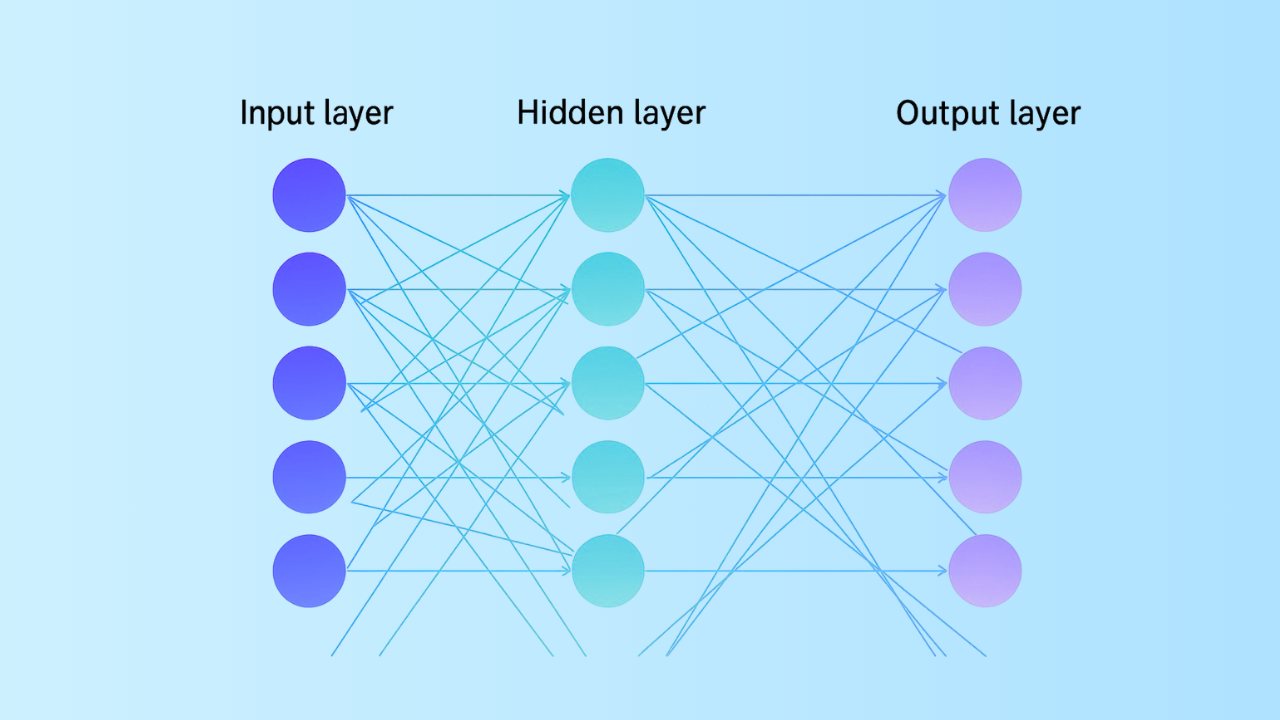

One of the most widely used approaches in machine learning is the neural network, also known as an artificial neural network (ANN). Inspired by the structure and function of the human brain, neural networks are made up of layers of interconnected nodes (similar to neurons). These networks excel at analyzing large, complex datasets and uncovering intricate patterns and relationships.

The simplest form of machine learning is supervised learning, where algorithms are trained on labeled datasets. Each training example is paired with the correct output label, enabling the model to learn the relationship between inputs and outputs. Once trained, the model can classify new data or predict outcomes for previously unseen inputs.
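
As a minimal sketch of supervised learning, the plain-Python example below uses k-nearest neighbors, one of the algorithms listed earlier. The labeled points, what the features mean, and the `knn_predict` helper are illustrative assumptions rather than any library's API: the "model" stores labeled examples and labels a new input by majority vote among the `k` closest ones.

```python
import math
from collections import Counter

# Hypothetical labeled training set: (features, label) pairs.
# Features are (height_cm, weight_kg); the labels are made up for illustration.
TRAINING_DATA = [
    ((150.0, 50.0), "small"),
    ((155.0, 55.0), "small"),
    ((160.0, 58.0), "small"),
    ((180.0, 80.0), "large"),
    ((185.0, 85.0), "large"),
    ((190.0, 90.0), "large"),
]


def euclidean(a, b):
    """Straight-line distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_predict(training_data, query, k=3):
    """Predict a label for `query` by majority vote among its k nearest labeled examples."""
    # Sort the labeled examples by distance to the query and keep the k closest.
    neighbors = sorted(training_data, key=lambda item: euclidean(item[0], query))[:k]
    # Return the most common label among those neighbors.
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    print(knn_predict(TRAINING_DATA, (158.0, 54.0)))  # → small
    print(knn_predict(TRAINING_DATA, (183.0, 82.0)))  # → large
```

k-NN is used here because it has no separate fitting step; most other supervised algorithms, such as logistic regression or decision trees, first fit parameters to the labeled data and then apply them to new inputs.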

Deep learning

Deep learning is an advanced subset of machine learning that leverages multilayered neural networks, known as deep neural networks, to more closely replicate the complex decision-making capabilities of the human brain.

Unlike traditional neural networks, which typically include only one or two hidden layers, deep neural networks contain an input layer, an output layer, and three or more hidden layers, often hundreds. These multiple layers allow the network to learn and extract increasingly abstract and sophisticated features from data as it passes through each layer.
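
To show what "passing through each layer" means, here is a minimal sketch of a forward pass through a small fully connected network in plain Python, with three hidden layers to match the threshold described above. The layer sizes, the random weights, and the ReLU activation are illustrative assumptions; a real deep network learns its weights during training and is normally built with a framework rather than hand-written loops.

```python
import random


def relu(x):
    """Rectified linear activation: keeps positive values, zeroes out negatives."""
    return max(0.0, x)


def make_layer(n_inputs, n_outputs, rng):
    """Create one fully connected layer as (weights, biases) with small random weights."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)] for _ in range(n_outputs)]
    biases = [0.0] * n_outputs
    return weights, biases


def forward(layers, inputs):
    """Pass `inputs` through every layer in order and return the output layer's values."""
    activations = inputs
    for i, (weights, biases) in enumerate(layers):
        # Each node computes a weighted sum of the previous layer's outputs plus a bias.
        sums = [sum(w * a for w, a in zip(row, activations)) + b
                for row, b in zip(weights, biases)]
        # Hidden layers apply the nonlinearity; the final output layer stays linear.
        is_output_layer = i == len(layers) - 1
        activations = sums if is_output_layer else [relu(s) for s in sums]
    return activations


if __name__ == "__main__":
    rng = random.Random(0)
    # 4 input features, three hidden layers of 8 nodes, and 2 output nodes.
    sizes = [4, 8, 8, 8, 2]
    network = [make_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]
    print(forward(network, [0.5, -1.0, 0.25, 2.0]))
```

Each extra layer re-combines the previous layer's outputs, which is what lets deeper networks represent progressively more abstract features.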

Deep learning excels at handling large, unstructured, and unlabeled datasets, enabling a form of unsupervised learning where the model automatically identifies patterns and relationships without human guidance. Once trained, these models can make accurate predictions and classifications at remarkable speed and scale.

Because deep learning reduces the need for manual feature engineering, it enables machine learning at an unprecedented scale. It is particularly well suited for applications such as natural language processing (NLP), computer vision, speech recognition, and other tasks that require precise pattern recognition in massive datasets. Today, deep learning is at the core of many AI-driven technologies we use daily, from virtual assistants and translation tools to facial recognition and recommendation systems.

Deep Learning Also Enables:

- Semi-Supervised Learning: Combines supervised and unsupervised approaches by using both labeled and unlabeled data to train models for classification and regression tasks.

- Self-Supervised Learning: Automatically generates labels from unstructured data, removing the need for manually labeled datasets while still providing effective supervisory signals.

- Reinforcement Learning: Trains models through trial and error, using reward and penalty signals to optimize decision-making rather than relying solely on pattern recognition.

- Transfer Learning: Leverages knowledge learned from one task or dataset to improve performance on a different but related task, reducing training time and data requirements.
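
The trial-and-error loop of reinforcement learning can be sketched without any neural network at all. The example below is a minimal epsilon-greedy multi-armed bandit, a classic simplified RL setting that the text does not name: the agent repeatedly picks an action, receives a reward or nothing, and updates its estimate of each action's value. The reward probabilities, `epsilon`, and the step count are hypothetical.

```python
import random


def run_bandit(reward_probs, steps=2000, epsilon=0.1, seed=42):
    """Epsilon-greedy bandit: learn which action pays off best through trial and error.

    reward_probs: hypothetical probability that each action yields a reward of 1.
    Returns the agent's estimated value of each action.
    """
    rng = random.Random(seed)
    n_actions = len(reward_probs)
    estimates = [0.0] * n_actions  # learned value of each action
    counts = [0] * n_actions       # how many times each action was tried

    for _ in range(steps):
        # Explore a random action with probability epsilon; otherwise exploit the best estimate.
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)
        else:
            action = max(range(n_actions), key=lambda a: estimates[a])
        # The environment responds with a reward (1) or no reward (0).
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        # Update the running average reward for the chosen action.
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates


if __name__ == "__main__":
    values = run_bandit([0.2, 0.5, 0.8])
    best = max(range(len(values)), key=lambda a: values[a])
    print("estimated values:", [round(v, 2) for v in values])
    print("best action:", best)
```

Deep reinforcement learning replaces the simple table of estimates with a neural network, so the same reward-driven update can scale to inputs such as game screens or robot sensor readings.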

What is Generative AI?

Generative AI, often referred to as "GenAI", is a branch of artificial intelligence that uses deep learning models to create original content. These systems can generate long-form text, realistic images, videos, audio, and more, all in response to a user’s prompt or request.

At its core, a generative model learns a simplified representation of its training data. It then uses that representation to produce new content that is similar — but not identical — to the data it was trained on.
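
As a deliberately tiny illustration of "learn a simplified representation, then sample from it", here is a word-level bigram model in plain Python. It is not a deep learning model; it stands in for one under the assumption that a table of which words follow which plays the role of the learned representation. The training sentences and the function names are hypothetical.

```python
import random
from collections import defaultdict

# Hypothetical training corpus.
CORPUS = [
    "the cat sat on the mat",
    "the dog sat on the rug",
    "the cat chased the dog",
]


def train_bigrams(sentences):
    """Learn a simplified representation: for each word, the words observed to follow it."""
    followers = defaultdict(list)
    for sentence in sentences:
        words = ["<s>"] + sentence.split() + ["</s>"]  # add start and end markers
        for current, nxt in zip(words, words[1:]):
            followers[current].append(nxt)
    return followers


def generate(followers, rng, max_words=12):
    """Sample a new sentence by repeatedly choosing a plausible next word."""
    word, output = "<s>", []
    while len(output) < max_words:
        # Choosing from the raw list samples each follower in proportion to its frequency.
        word = rng.choice(followers[word])
        if word == "</s>":
            break
        output.append(word)
    return " ".join(output)


if __name__ == "__main__":
    model = train_bigrams(CORPUS)
    rng = random.Random(7)
    for _ in range(3):
        print(generate(model, rng))  # similar to, but not always identical to, the corpus
```

Outputs can recombine fragments of different training sentences (for example, a cat ending up on the rug), which is the same "similar but not identical" behavior that large generative models show at vastly greater scale.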

While generative models have been used for statistical data analysis for many years, the last decade has seen a significant evolution. They are now capable of analyzing and creating far more complex forms of data. This advancement has been driven by three major deep learning model types:

- Variational Autoencoders (VAEs): Introduced in 2013, VAEs enabled AI systems to generate multiple variations of content in response to prompts or specific instructions.

- Diffusion Models: First introduced in 2015, diffusion models are trained by gradually adding random noise to images until they are unrecognizable and learning to reverse that process. To generate content, they start from noise and remove it step by step, producing new, original images based on the user’s request.

- Transformers: Designed to process and generate sequential data, transformers can create extended sequences of words, images, video frames, or even code. They are the foundation of many of today’s most popular generative AI tools, including ChatGPT (GPT-4), Copilot, and Bard; BERT, another well-known transformer model, is used mainly for understanding rather than generating text.
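
The "add noise, then remove it" idea behind diffusion models can be made concrete with the forward (noising) half of the process. This sketch assumes the widely used formulation in which a clean signal x0 is mixed with Gaussian noise as sqrt(alpha_bar_t)·x0 + sqrt(1 − alpha_bar_t)·noise, where alpha_bar_t shrinks toward 0 as the step t grows. The linear noise schedule, the four-value "image", and the function names are illustrative assumptions; the reverse (denoising) half needs a trained neural network and is omitted.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise amounts that increase linearly (a common choice, assumed here)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t: how much original signal survives."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, t, alpha_bars, rng):
    """Jump directly to noising step t by mixing the clean signal with Gaussian noise."""
    signal_scale = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    return [signal_scale * x + noise_scale * rng.gauss(0.0, 1.0) for x in x0]


if __name__ == "__main__":
    rng = random.Random(0)
    alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
    image = [0.9, -0.4, 0.1, 0.7]  # a toy four-pixel image
    for t in (0, 250, 999):
        noisy = add_noise(image, t, alpha_bars, rng)
        print(t, round(alpha_bars[t], 4), [round(v, 2) for v in noisy])
```

At t = 0 the output is almost the original image; by the last step almost no signal remains, which is the "unrecognizable" state a trained model learns to reverse.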

How Does Generative AI Work?

The Three Phases

Generative AI systems generally operate in three key phases: training, tuning, and generation with ongoing evaluation.

1. Training

The process begins with the creation of a foundation model — a deep learning model that serves as the backbone for multiple Generative AI applications. The most common foundation models today are large language models (LLMs) used for text generation, but there are also models designed for images, video, audio, music, and even multimodal models that handle several content types at once.

To build a foundation model, developers train a deep learning algorithm on massive volumes of unstructured and unlabeled data — often terabytes or even petabytes of text, images, and video from across the internet. This training process produces a neural network containing billions of parameters that encode entities, patterns, and relationships in the data, enabling the model to autonomously generate new content when prompted.
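
For text foundation models, "training on unlabeled data" usually means self-supervised next-token prediction: the labels are simply the next tokens of the training text itself. Under that assumption (the text above does not name a specific objective), training adjusts the parameters θ to minimize the average negative log-likelihood of each token x_t given the tokens before it, over a sequence of T tokens:

```latex
\mathcal{L}(\theta) = -\frac{1}{T} \sum_{t=1}^{T} \log p_\theta\left(x_t \mid x_1, \ldots, x_{t-1}\right)
```

For example, if the model assigns probability 0.5 to the correct next token at every position, the loss is −ln 0.5 ≈ 0.693 per token; pushing that probability toward 1 pushes the loss toward 0.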

This training step is extremely compute-intensive, requiring thousands of clustered graphics processing units (GPUs), weeks of processing time, and often millions of dollars in infrastructure costs. Open-source foundation models, such as Meta’s Llama 2, help developers bypass this expensive step by providing pre-trained models that can be adapted for new applications.

2. Tuning

Once the foundation model is trained, it must be tuned for a specific application or task. Tuning can be performed in several ways:

- Fine-Tuning: Feeding the model application-specific, labeled data — such as sample prompts, questions, and the correct responses — to improve accuracy and formatting.

- Reinforcement Learning from Human Feedback (RLHF): Human evaluators review the model’s responses, rating or correcting them so the model learns to generate more accurate, relevant outputs. This is commonly used in chatbots and virtual assistants.
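
One common component of RLHF, not detailed in the text, is a reward model trained on human preference pairs: evaluators mark which of two responses they prefer, and the reward model is trained so that the preferred response scores higher. A minimal sketch of the pairwise (Bradley-Terry-style) loss often used for this, with made-up reward scores, could look like this:

```python
import math


def pairwise_preference_loss(reward_chosen, reward_rejected):
    """-log(sigmoid(r_chosen - r_rejected)): small when the preferred response scores higher."""
    margin = reward_chosen - reward_rejected
    # log(1 + exp(-margin)) equals -log(sigmoid(margin)); log1p keeps small values accurate.
    return math.log1p(math.exp(-margin))


if __name__ == "__main__":
    # Hypothetical reward-model scores for (human-preferred, human-rejected) responses.
    print(round(pairwise_preference_loss(2.0, -1.0), 4))  # → 0.0486 (model agrees with evaluator)
    print(round(pairwise_preference_loss(-1.0, 2.0), 4))  # → 3.0486 (model disagrees)
```

Minimizing this loss over many rated pairs teaches the reward model to mimic human judgments; that reward model then supplies the reward signal used to further tune the generative model.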

3. Generation, Evaluation, and Continuous Tuning

After tuning, the model is deployed to generate content in response to real-world prompts. Developers and users continuously monitor its outputs, fine-tuning the model regularly — sometimes as often as weekly — to maintain or improve accuracy and relevance. The underlying foundation model, however, is updated far less frequently, often only once every 12 to 18 months.

A popular method for further improving output quality is Retrieval-Augmented Generation (RAG), which allows the model to access external, up-to-date data sources beyond its training set, resulting in more accurate and contextually relevant responses.
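
A minimal sketch of the retrieval step in RAG: score a small document store against the user's question, then place the best match in the prompt sent to the model. The word-overlap cosine similarity, the sample documents, and the prompt template are illustrative assumptions; production systems typically use learned embeddings and a vector database, and the final call to a language model is omitted here.

```python
import math
import re
from collections import Counter

# Hypothetical external knowledge that the model was not trained on.
DOCUMENTS = [
    "Our return policy allows returns within 30 days with a receipt.",
    "Standard shipping takes 3 to 5 business days.",
    "Gift cards cannot be exchanged for cash.",
]


def bag_of_words(text):
    """Lowercase word counts, a crude stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question, documents, k=1):
    """Return the k documents most similar to the question."""
    q = bag_of_words(question)
    ranked = sorted(documents, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)
    return ranked[:k]


def build_prompt(question, documents, k=1):
    """Augment the user's question with retrieved context before generation."""
    context = "\n".join(retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    print(build_prompt("What is your return policy?", DOCUMENTS))
```

Because the context is fetched at question time, updating `DOCUMENTS` changes the model's answers without retraining it.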

AI agents and agentic AI

An AI agent is an autonomous program capable of performing tasks and achieving goals on behalf of a user or another system — without requiring human intervention. AI agents can design their own workflows and leverage available tools, applications, or services to complete tasks efficiently.

Agentic AI refers to a system made up of multiple AI agents working together in a coordinated or orchestrated manner. This collaboration allows them to solve more complex problems or achieve larger goals than any single agent could accomplish alone.

Unlike traditional chatbots or AI models, which operate under predefined rules and often depend on human input, AI agents and agentic AI demonstrate true autonomy. They are goal-driven, adaptive to changing circumstances, and capable of acting independently. The terms "agent" and "agentic" highlight this capacity for purposeful, self-directed action.

One way to think about agents is as the natural evolution of generative AI. While generative AI models focus on producing content based on learned patterns, AI agents can take that content, interact with other agents or tools, make decisions, and complete tasks. For example, a generative AI app might simply tell you the best time to climb Mount Everest based on your schedule, but an AI agent could take this a step further by booking your flight and reserving a hotel in Nepal automatically.
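
The difference between producing an answer and completing a task can be sketched as a simple agent loop: a planner decides which tool to call next, the agent calls it, stores the result, and repeats until the goal is met. Everything below is hypothetical: the tools return canned text instead of calling real flight or hotel services, and the rule-based `plan_next_step` stands in for the language model a real agent would use to choose its actions.

```python
from typing import Callable, Dict, List, Optional, Tuple

State = Dict[str, str]


# Hypothetical tools: each returns canned text instead of calling a real service.
def suggest_climb_window(state: State) -> str:
    return "mid-May"


def book_flight(state: State) -> str:
    return f"flight to Nepal booked for {state['climb_window']}"


def reserve_hotel(state: State) -> str:
    return f"hotel in Nepal reserved for {state['climb_window']}"


# Tool name -> (function, key under which the agent stores the tool's result).
TOOLS: Dict[str, Tuple[Callable[[State], str], str]] = {
    "suggest_climb_window": (suggest_climb_window, "climb_window"),
    "book_flight": (book_flight, "flight"),
    "reserve_hotel": (reserve_hotel, "hotel"),
}


def plan_next_step(state: State) -> Optional[str]:
    """Stand-in for an LLM planner: choose the first tool whose result is still missing."""
    for name, (_, result_key) in TOOLS.items():
        if result_key not in state:
            return name
    return None  # every sub-goal is done


def run_agent(max_steps: int = 10) -> Tuple[State, List[str]]:
    """Plan, act, and record observations until the goal is met or the step budget runs out."""
    state: State = {}
    log: List[str] = []
    for _ in range(max_steps):
        tool_name = plan_next_step(state)
        if tool_name is None:
            break
        tool, result_key = TOOLS[tool_name]
        state[result_key] = tool(state)  # act, then remember the observation
        log.append(f"{tool_name} -> {state[result_key]}")
    return state, log


if __name__ == "__main__":
    _, steps = run_agent()
    print("\n".join(steps))
```

A generative-only app would stop after the first step; the loop's ability to keep choosing and executing tools is what turns a suggestion into completed bookings.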

Benefits of Artificial Intelligence

Artificial intelligence delivers significant advantages across industries and applications. Below are some of the most impactful benefits AI provides today:

- Automation of Repetitive Tasks

- Faster, Data-Driven Insights

- Enhanced Decision-Making

- Fewer Human Errors

- 24/7 Availability and Consistency

- Reduced Physical Risks

Automation of Repetitive Tasks

AI excels at handling routine and repetitive work, both digital and physical. It can collect, enter, and preprocess data automatically or perform physical tasks such as warehouse picking and manufacturing assembly. This automation frees humans to focus on higher-value, creative, and strategic activities.

Enhanced Decision-Making

Whether used for decision support or fully automated decision-making, AI provides faster, more accurate predictions and data-driven insights. Combined with automation, it allows businesses to seize opportunities or respond to crises in real time, often without requiring human intervention.

Fewer Human Errors

AI can help reduce errors by guiding users through proper processes, detecting potential mistakes before they occur, and even automating entire workflows. In critical fields like healthcare, AI-assisted surgical robotics can deliver consistent precision. Machine learning models can also become more accurate as they are trained on more and better data, further reducing the risk of errors.

24/7 Availability and Consistency

Unlike humans, AI systems do not tire and can operate continuously without breaks. Chatbots and virtual assistants provide round-the-clock customer support, while AI-driven production systems maintain consistent quality and output on manufacturing lines. This reliability helps reduce staffing pressure and ensures uniform performance.

Reduced Physical Risks

AI can take over hazardous tasks that would otherwise put human workers in danger — such as bomb disposal, working in deep oceans or space, or managing dangerous materials. Although still evolving, self-driving vehicles hold the potential to significantly reduce accidents and injuries, improving safety for passengers and drivers alike.

AI use cases

Artificial intelligence is applied across countless industries and scenarios. Below are just a few examples that highlight its potential and impact:

Customer Experience, Service, and Support

Businesses can deploy AI-powered chatbots and virtual assistants to manage customer inquiries, support tickets, and more. These tools leverage natural language processing (NLP) and generative AI to understand and respond to questions about order status, product details, return policies, and other common issues.

Chatbots and virtual assistants deliver round-the-clock support, provide quick answers to frequently asked questions (FAQs), free up human agents to focus on more complex problems, and ensure faster, more consistent service for customers.

Fraud Detection

Machine learning and deep learning algorithms can analyze transaction data and detect anomalies, such as unusual purchase behavior or login locations, that may signal fraudulent activity. This allows organizations to respond quickly, minimize losses, and provide customers with greater security and peace of mind.
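
A very simple form of anomaly detection flags a transaction whose amount is far from a customer's usual spending. The sketch below uses a z-score against the customer's purchase history; the threshold of 3 standard deviations and the sample amounts are assumptions, and real fraud systems combine many more signals (location, device, timing) and usually rely on learned models.

```python
import statistics


def is_anomalous(history, amount, threshold=3.0):
    """Flag `amount` if it lies more than `threshold` standard deviations from the historical mean."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # sample standard deviation; needs at least 2 values
    if stdev == 0:
        return amount != mean  # any deviation from perfectly constant history is unusual
    z_score = (amount - mean) / stdev
    return abs(z_score) > threshold


if __name__ == "__main__":
    past_purchases = [42.0, 38.5, 51.0, 45.25, 40.0, 47.5, 39.0]  # hypothetical history
    for amount in (44.0, 950.0):
        print(amount, "suspicious" if is_anomalous(past_purchases, amount) else "normal")
```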

Personalized Marketing

Retailers, financial institutions, and other customer-focused businesses use AI to deliver personalized marketing experiences and campaigns. By analyzing customer purchase history and behavioral data, deep learning models can recommend products and services that customers are most likely to want. AI can even generate tailored copy and special offers for each customer in real time, improving engagement, increasing sales, and reducing churn.

Human Resources and Recruitment

AI-powered recruitment platforms simplify the hiring process by screening resumes, matching candidates to job descriptions, and even conducting initial interviews through video analysis. These solutions significantly reduce the administrative burden of managing large candidate pools, shorten response times, and lower time-to-hire — ultimately creating a better experience for candidates, regardless of the outcome.

Application Development and Modernization

Generative AI and automation tools can accelerate software development by handling repetitive coding tasks and supporting the migration and modernization of legacy applications at scale. This improves code consistency, reduces errors, and speeds up development cycles, enabling teams to deliver solutions faster and more efficiently.

Predictive Maintenance

Machine learning models analyze data from sensors, Internet of Things (IoT) devices, and operational technology (OT) systems to predict when maintenance will be needed and detect potential equipment failures before they occur. AI-driven predictive maintenance helps minimize downtime, reduce costs, and proactively address supply chain issues before they impact business operations.
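
As a minimal sketch of "predicting when maintenance will be needed", the code below fits a straight-line trend to recent sensor readings with ordinary least squares and extrapolates when the reading will reach a failure threshold. The vibration readings, the threshold, and the assumption that wear follows a linear trend are all hypothetical; real systems usually learn from many sensors and from historical failure records.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


def hours_until_threshold(hours, readings, threshold):
    """Extrapolate the trend to estimate hours until readings reach `threshold` (None if not rising)."""
    slope, intercept = fit_line(hours, readings)
    if slope <= 0:
        return None
    crossing_hour = (threshold - intercept) / slope
    return max(0.0, crossing_hour - hours[-1])


if __name__ == "__main__":
    hours = [0, 10, 20, 30, 40]
    vibration_mm_s = [2.0, 2.5, 3.0, 3.5, 4.0]  # hypothetical rising vibration level
    print(hours_until_threshold(hours, vibration_mm_s, threshold=7.0))  # roughly 60 hours
```

Scheduling the repair inside that window, rather than after a breakdown, is the downtime saving the paragraph describes.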

AI challenges and risks

Organizations are rapidly embracing artificial intelligence (AI) to unlock its many advantages and drive innovation. While this swift adoption is necessary to remain competitive, implementing and sustaining AI workflows also introduces a range of challenges and risks.

Data risks

One of the most pressing concerns is data risk. Since AI systems depend on large datasets, they are vulnerable to issues such as data poisoning, tampering, bias, and cyberattacks that may result in breaches. To mitigate these threats, organizations must safeguard data integrity and enforce strong security and availability measures across the entire AI lifecycle—from development and training through deployment and beyond.

Model risks

Another critical challenge involves model risk. Malicious actors can attempt to steal, reverse engineer, or manipulate AI models. By tampering with a model’s architecture, parameters, or weights (the very elements that determine accuracy and performance), attackers can compromise its reliability and effectiveness.

Operational risks

AI systems also face operational risks, including model drift, inherent bias, and weaknesses in governance structures. Without proactive monitoring and management, these risks can cause performance degradation, system failures, and open doors for cybersecurity threats.
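
Model drift is often monitored by comparing how a feature, or the model's scores, are distributed in production against how they were distributed at training time. The sketch below computes the population stability index (PSI), one common drift metric that the text does not name; the bin proportions are made up, and treating PSI above roughly 0.2 as significant drift is an industry rule of thumb rather than a standard.

```python
import math


def population_stability_index(expected, actual, eps=1e-6):
    """PSI over matching bins of two distributions (each a list of proportions summing to 1)."""
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        psi += (a - e) * math.log(a / e)
    return psi


if __name__ == "__main__":
    training_bins = [0.25, 0.25, 0.25, 0.25]    # share of training data in each score bin
    production_bins = [0.10, 0.20, 0.30, 0.40]  # hypothetical share seen in production
    psi = population_stability_index(training_bins, production_bins)
    print(round(psi, 3), "drift suspected" if psi > 0.2 else "stable")
```

Running a check like this on a schedule is one concrete form of the "proactive monitoring" the paragraph calls for.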

Ethics and legal risks

Finally, organizations must address ethical and legal risks. Failure to prioritize safety, fairness, and transparency can result in privacy violations and discriminatory outcomes. For instance, training data with hidden biases could skew hiring models, reinforcing stereotypes and unfairly favoring specific demographic groups.
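
One simple check for the hiring scenario above is to compare selection rates across demographic groups. The sketch computes each group's selection rate and the ratio of the lowest rate to the highest; the 0.8 cutoff follows the "four-fifths rule" from US employment-selection guidance, and the outcome data is hypothetical. Passing this check does not prove a model is fair; it is one signal among many.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs -> {group: fraction selected}."""
    totals, selected = defaultdict(int), defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions):
    """Lowest group selection rate divided by the highest (1.0 means equal rates)."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


if __name__ == "__main__":
    # Hypothetical screening outcomes from a resume-ranking model.
    outcomes = ([("group_a", True)] * 30 + [("group_a", False)] * 70
                + [("group_b", True)] * 18 + [("group_b", False)] * 82)
    ratio = disparate_impact_ratio(outcomes)
    print(selection_rates(outcomes), round(ratio, 2))
    if ratio < 0.8:
        print("Below the four-fifths threshold: investigate for bias")
```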

By recognizing and addressing these risks, organizations can strengthen trust, ensure compliance, and maximize the long-term value of AI technologies.

AI ethics and governance

AI ethics is a multidisciplinary field focused on maximizing the positive impact of artificial intelligence while minimizing risks and negative consequences. Its principles are put into practice through systems of AI governance, which establish guardrails to ensure that AI tools and applications are developed and used responsibly.

AI governance provides oversight mechanisms designed to identify and manage risks throughout the AI lifecycle. A truly ethical governance framework requires collaboration among diverse stakeholders—including developers, users, policymakers, and ethicists—to ensure that AI systems align with societal values and promote fairness, accountability, and safety.

Here are common values associated with AI ethics and responsible AI:

- Explainability and Interpretability: As AI grows more advanced, it becomes harder for humans to understand how algorithms reach their results. Explainable AI provides processes and methods that enable users to interpret, trust, and comprehend the outputs generated by algorithms.

- Fairness and Inclusion: Machine learning inherently involves statistical discrimination, but it becomes harmful when it systematically advantages privileged groups while disadvantaging others. To promote fairness, practitioners can reduce algorithmic bias during data collection and model design while also fostering more diverse and inclusive teams.

- Robustness and Security: Robust AI systems are designed to handle abnormal inputs and malicious attacks without causing unintended harm. They also safeguard against vulnerabilities to ensure resilience against both intentional and unintentional interference.

- Accountability and Transparency: Organizations should establish clear governance structures and assign responsibility for AI development, deployment, and outcomes. Users must be able to understand how AI systems function, evaluate their capabilities, and recognize their limitations. Greater transparency empowers users to make informed judgments about AI services.

- Privacy and Compliance: Regulatory frameworks such as GDPR require organizations to uphold privacy standards when processing personal data. Protecting AI models that use sensitive information, carefully controlling input data, and building adaptable systems are essential to ensuring compliance with evolving regulations and ethical expectations.

Weak AI vs. Strong AI

To better understand the use of artificial intelligence at different levels of capability, researchers classify AI into distinct types based on its sophistication and complexity.

Weak AI, also referred to as “narrow AI,” describes systems built to perform specific tasks or sets of tasks. Examples include smart voice assistants such as Amazon Alexa and Apple Siri, social media chatbots, or the semi-autonomous driving features found in modern vehicles. These systems are highly effective within their programmed domains but lack the ability to generalize knowledge beyond them.

Strong AI, often called “artificial general intelligence” (AGI) or “general AI,” represents a more advanced concept. AGI would have the capacity to understand, learn, and apply knowledge across diverse tasks at a level equal to, or exceeding, that of humans. At present, this form of AI remains theoretical, with no existing system close to achieving it. Researchers suggest that realizing AGI, if it proves possible, would require significant breakthroughs in computing power and design. For now, truly self-aware AI, as often depicted in science fiction, remains speculative.

What is the History of Artificial Intelligence (AI)?

The concept of “a machine that thinks” can be traced back to ancient Greece. However, the modern evolution of artificial intelligence has been shaped by a series of milestones since the rise of electronic computing:

1950 Alan Turing publishes Computing Machinery and Intelligence. In this landmark paper, Turing—celebrated for breaking the German ENIGMA code during World War II and often regarded as the “father of computer science”—asks the question: “Can machines think?” He introduces what is now known as the Turing Test, in which a human interrogator attempts to distinguish between responses from a human and a computer. While debated extensively, the test remains a foundational idea in AI and philosophy.

1956 At the first AI conference, held at Dartmouth College, John McCarthy coins the term “artificial intelligence.” McCarthy, who later invented the Lisp programming language, helped establish the field. That same year, Allen Newell, J.C. Shaw, and Herbert Simon create the Logic Theorist, widely regarded as the first AI program.

1967 Frank Rosenblatt builds the Mark 1 Perceptron, the first neural-network-based computer capable of learning through trial and error. In 1969, Marvin Minsky and Seymour Papert publish Perceptrons, a seminal work on neural networks. While influential, the book also contributed to a temporary decline in neural network research.

1980s The backpropagation algorithm, a method for training neural networks, gains widespread use, marking a breakthrough for AI applications.

1995 Stuart Russell and Peter Norvig publish Artificial Intelligence: A Modern Approach, which quickly becomes a definitive textbook in AI education. It outlines four perspectives for defining AI, distinguishing systems by whether they are designed to think or to act, and whether they do so humanly or rationally.

1997 IBM’s Deep Blue defeats world chess champion Garry Kasparov in a six-game rematch, a year after losing their first match, demonstrating AI’s growing computational power.

2004 John McCarthy publishes What Is Artificial Intelligence?, offering one of the most frequently cited definitions of AI. By this time, the rise of big data and cloud computing is enabling organizations to manage increasingly vast datasets, laying the groundwork for modern AI training.

2011 IBM’s Watson® defeats Jeopardy! champions Ken Jennings and Brad Rutter, showcasing AI’s potential in natural language processing. Around this period, data science emerges as a distinct and rapidly growing discipline.

2015 Baidu’s Minwa supercomputer leverages convolutional neural networks (CNNs) to classify images with greater accuracy than humans, marking a leap in deep learning.

2016 DeepMind’s AlphaGo, powered by advanced deep neural networks, defeats Go world champion Lee Sedol in a five-game match. The achievement is notable given Go’s extraordinary complexity, with over 14.5 trillion possible positions after only four moves. Google had acquired DeepMind in 2014 for a reported USD 400 million.

2022 Large language models (LLMs), such as OpenAI’s ChatGPT, redefine AI performance and enterprise potential. Generative AI, enabled by deep learning and massive pretraining datasets, ushers in a new era of capability.

2024 AI enters a new phase of innovation. Multimodal models capable of processing multiple data types—such as text, images, and speech—deliver richer, more versatile experiences by combining natural language processing and computer vision. At the same time, smaller, more efficient models are gaining traction as an alternative to increasingly large and resource-intensive systems.
